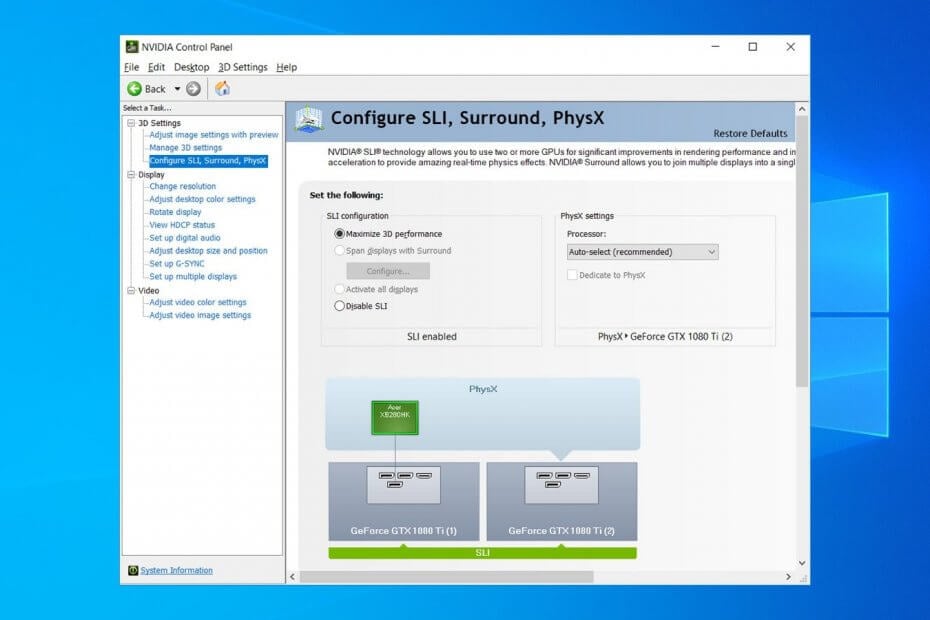

We are going to show one graph per video card that contains all the temperature and wattage information in one place. The green bar at the top represents the total system wattage at full load. The yellow bar below it represents the GPU-Z Power Consumption board power result. The blue bar below that represents the total system wattage at idle. Finally, the orange bar at the bottom represents the GPU temperature in Celsius.

Let’s start at the bottom and work our way up. The orange bar represents GPU temperature. We see no difference in GPU temperature between the power modes; none of them seemed to affect the GPU temperature at full load while gaming. However, the power modes do directly affect the blue bar, the total system idle wattage. In Optimal Power and Adaptive it is similar at 61W, but at Prefer Maximum Performance it skyrockets to 103W just sitting there idle. Next, we have the yellow bar, which is the GPU-Z board power. It looks the same across the power modes. Then we look at the green bar, which is the peak total system wattage, and it also looks the same. It appears that on this video card the only figure that was affected was the idle wattage. Using Optimal Power or Adaptive instead of Prefer Maximum Performance will indeed help you a great deal on idle power.

The GeForce RTX 2060 SUPER results are mostly similar. We do see a 2-degree difference, though, between Optimal Power and Prefer Maximum Performance. It’s not really much, but the RTX 2080 SUPER was a lot closer in differences. We also see a big difference in idle power when using Prefer Maximum Performance: it goes from 58W up to 91W, a very big difference for sure. We see a 3-Watt difference in the GPU-Z Power Consumption numbers across Optimal Power, Prefer Maximum Performance and Adaptive.
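To put the idle figures side by side, here is a minimal sketch that works out the extra idle draw under Prefer Maximum Performance from the wattages quoted above. The function name `idle_penalty` and the dictionary layout are made up for illustration. The label for the first card is also an assumption, since the text only calls it "this video card".

```python
# Total system idle wattage quoted in the text, in watts.
# "first card" is a placeholder label: the text does not name that card
# in the sentence that gives the 61W / 103W figures.
IDLE_WATTS = {
    "first card": {"optimal_or_adaptive": 61, "prefer_max_performance": 103},
    "RTX 2060 SUPER": {"optimal_or_adaptive": 58, "prefer_max_performance": 91},
}


def idle_penalty(low_watts, high_watts):
    """Return (extra watts, percent increase) of high_watts over low_watts."""
    extra = high_watts - low_watts
    percent = round(extra / low_watts * 100, 1)
    return extra, percent


for card, modes in IDLE_WATTS.items():
    extra, percent = idle_penalty(
        modes["optimal_or_adaptive"], modes["prefer_max_performance"]
    )
    print(f"{card}: +{extra}W at idle ({percent}% more)")
# first card: +42W at idle (68.9% more)
# RTX 2060 SUPER: +33W at idle (56.9% more)
```

These are whole-system idle numbers, so the percentages describe the system at the wall rather than the graphics card alone.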

# NVIDIA CONTROL PANEL POWER MANAGEMENT MODE FOR LESS POWER UPDATE #

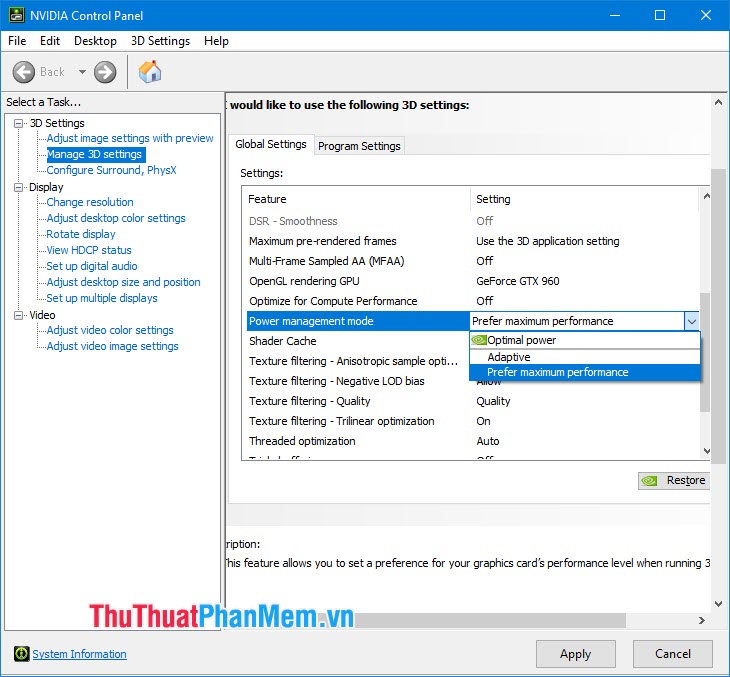

Now we have the important power and temperature comparisons.

I’ve just been experimenting with the Power management mode in the Nvidia control panel. Bear with me on this one; it’s a lengthy example, but I’d welcome any thoughts on it, and it may be of help to some of you. Like many suggestions I’ve seen in posts elsewhere on this forum, I’ve always had it set to Prefer maximum performance.

I keep a close eye on my CPU and GPU performance (using Open Hardware Monitor) after trying a bit of tweaking, after driver updates, etc.

I usually have my FPS limited to 33fps (more recently 40fps with the last Nvidia driver) in the Nvidia control panel, and have Vsync set to Fast in there as well. This usually sees me running at about 80-85% GPU core usage with temps of about 65-75C. I know a lot of people advocate running the GPU at 100%, but I don’t agree with that; I prefer to have a little bit of spare capacity for when I go into more demanding areas, and it keeps my GPU about 7C lower in temp than at 100%.

Today I was flying along over the Swiss Alps in my Cessna Caravan and noticed my GPU was pegged at 95-99% core usage. I was up at 11,000 feet with live weather on and lots of clouds. I thought maybe it was due to the amount of cloud being rendered, but when I finally flew into some clear areas with only a few clouds on the horizon it was still showing 95-99%.

Taking a look at Open Hardware Monitor, I saw this. For those of you not familiar with Open Hardware Monitor, the first column is the current value, the second column the minimum and the third column the maximum value recorded. As you can see, it shows the core running at 1230 MHz at 95% and the temps down at 53C. Normally I would see the GPU core clock speed at 1980 MHz all the time, the GPU core at around 80-85%, and temps of about 70C in that type of scenery.

I then realised that after my recent driver update the Nvidia control panel Power management mode was set to Optimal power rather than my usual Prefer maximum performance.
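For anyone unsure how the three Open Hardware Monitor columns in the post relate to each other, here is a small sketch of a current/minimum/maximum tracker. It is not how Open Hardware Monitor is implemented. The `SensorStat` class and the sample readings are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorStat:
    """Tracks the latest, lowest and highest reading seen for one sensor."""

    current: Optional[float] = None
    minimum: Optional[float] = None
    maximum: Optional[float] = None

    def update(self, value: float) -> None:
        # The latest reading always becomes the "current" column.
        self.current = value
        # Minimum and maximum only move when a new extreme is seen.
        self.minimum = value if self.minimum is None else min(self.minimum, value)
        self.maximum = value if self.maximum is None else max(self.maximum, value)


# Made-up core clock readings in MHz, for illustration only.
core_clock = SensorStat()
for mhz in (1980, 1230, 1500):
    core_clock.update(mhz)

print(core_clock.current, core_clock.minimum, core_clock.maximum)
# 1500 1230 1980
```

The minimum and maximum columns keep their extremes until the monitor is reset, so a single dip or spike stays visible long after the current value has moved on.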
